Building a Distributed Step Sequencer

In this tutorial, we will use the soundworks sync plugin, which we learned about in the previous tutorial, to build a more advanced application: a distributed step sequencer.

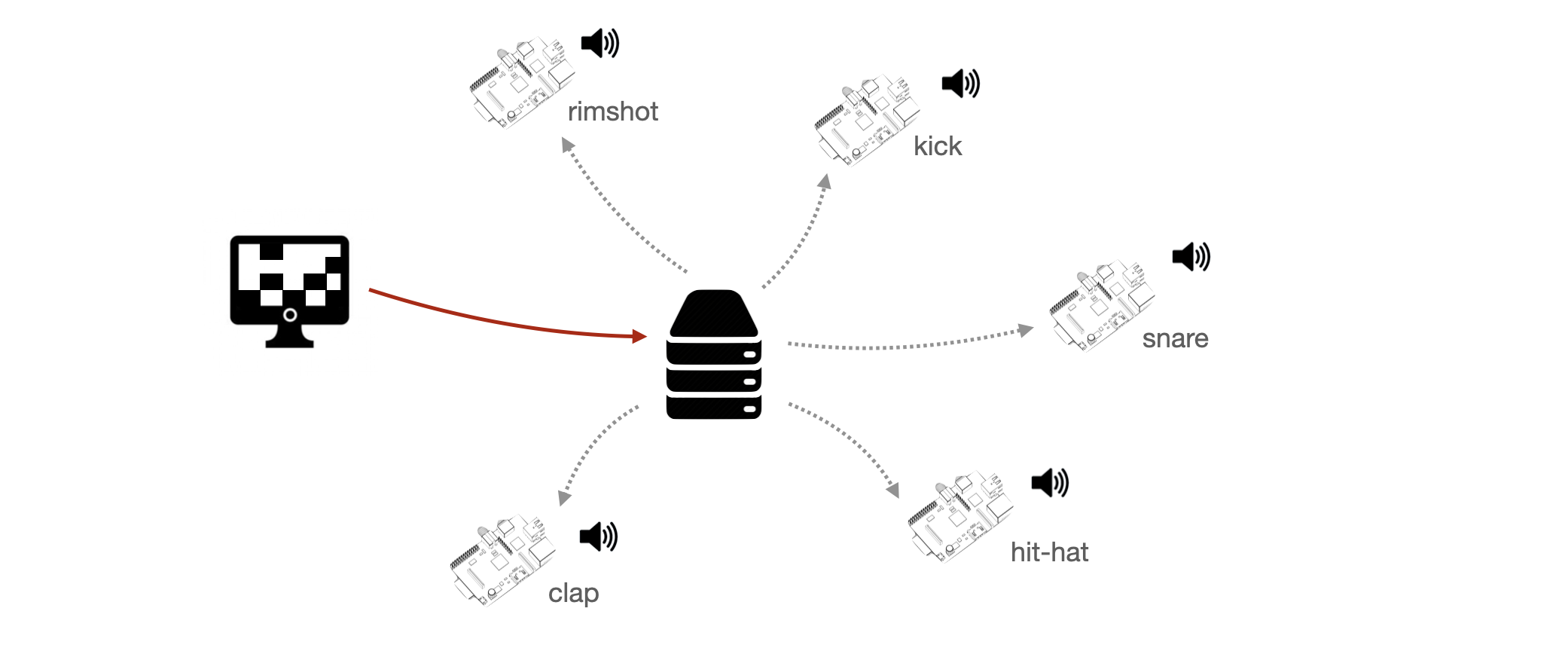

The step sequencer will be made of several Node.js clients, each one representing a track of the step sequencer (i.e. one for the kick, one for the snare, etc.), and of one browser controller client to rule them all.

Along the way, we will use another plugin, the checkin plugin, which allows us to assign a unique index to each client.


Scaffolding the application

Let's start by scaffolding our application using the soundworks wizard:

cd path/to/tutorials

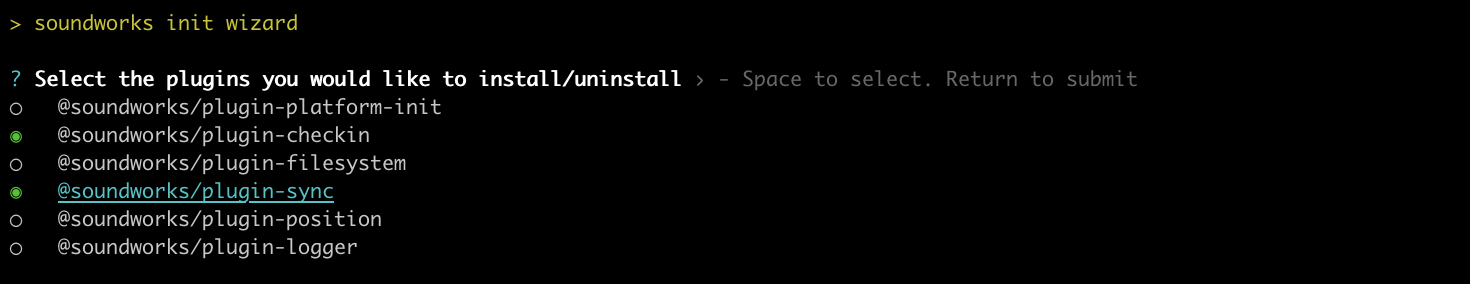

npx @soundworks/create@latest step-sequencerWhen the wizard ask to select the plugin, let's then select @soundworks/plugin-sync and the @soundworks/plugin-checkin plugins:

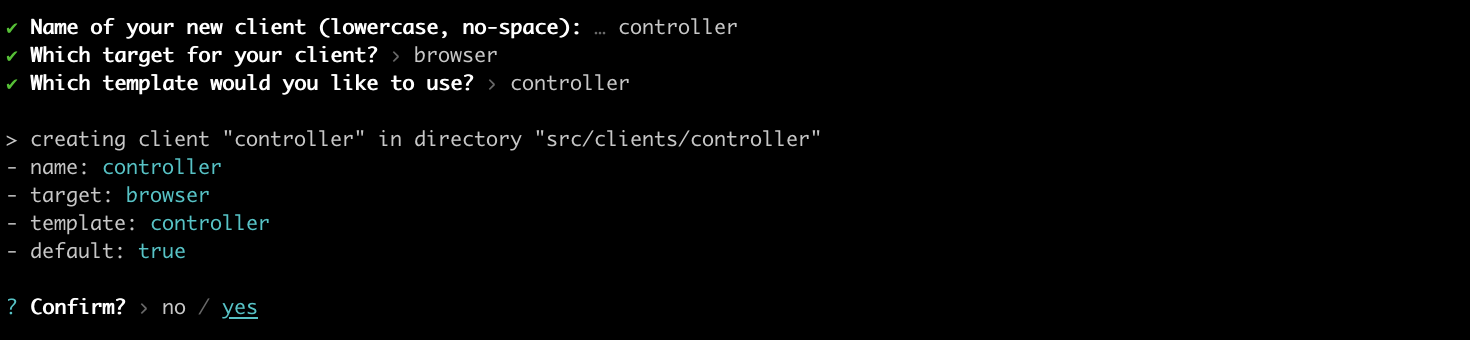

And let's define our default client as a controller:

Once done, let's go into our step-sequencer directory and install a few more dependencies we will need using npm:

cd step-sequencer

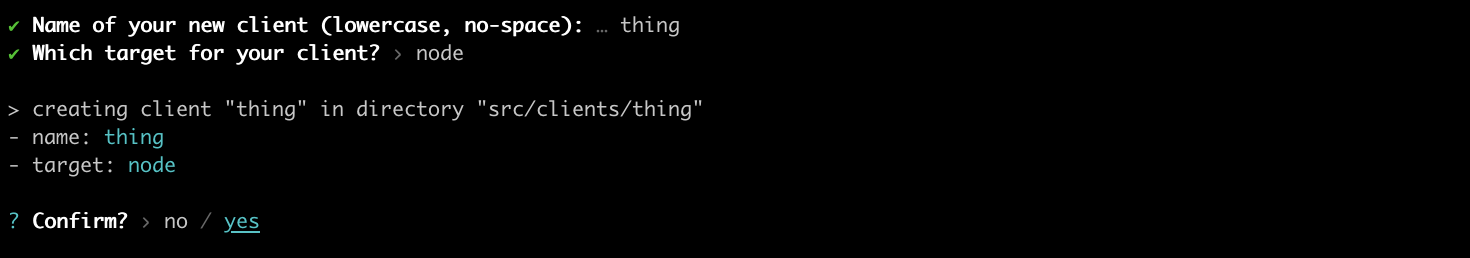

npm install --save @ircam/sc-scheduling @ircam/sc-loader node-web-audio-apiFinally, let's just create our node client, we will name thing:

npx soundworks --create-client

We can now start our server:

```sh
npm run dev
```

And, in a different "Terminal" window, emulate 5 thing clients:

```sh
cd path/to/tutorials/step-sequencer
EMULATE=5 npm run watch thing
```

TIP

To easily get the current directory in "Terminal", you can use the pwd command.

Defining the global state

Now that everything is set up, let's continue by defining the parameters that describe our application and declaring them in a global state.

We will need several parameters to control our step sequencer:

- A boolean defining whether the step sequencer is running or not ("start / stop").

- A timestamp (in synchronized time) associated with the start event, to make sure all our clients start at exactly the same time.

- A BPM value for our step sequencer.

- A score, describing which steps are active in each track.

To keep things simple, we won't allow the BPM to be changed while the step sequencer is running. Supporting this would require synchronizing the BPM change across all clients, which would overcomplicate this tutorial.
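Because every client shares the same startTime and BPM, each one can compute on its own which step is playing at any synchronized time. Here is a minimal sketch of that arithmetic, assuming (our assumption, the tutorial does not state it) that a step lasts one beat, i.e. 60 / BPM seconds; stepDuration and stepAt are hypothetical helpers:

```javascript
// Hypothetical helpers illustrating the shared-timeline arithmetic.
// Assumption: one step per beat, so a step lasts 60 / BPM seconds.

/** Duration of one step, in seconds. */
function stepDuration(BPM) {
  return 60 / BPM;
}

/**
 * Index of the step playing at `syncTime`, for a sequencer started at
 * `startTime` (both in synchronized time) with `numSteps` steps per loop.
 * Returns -1 if the sequencer has not started yet.
 */
function stepAt(syncTime, startTime, BPM, numSteps) {
  const elapsed = syncTime - startTime;
  if (elapsed < 0) return -1;
  const stepsElapsed = Math.floor(elapsed / stepDuration(BPM));
  return stepsElapsed % numSteps;
}

// At 120 BPM a step lasts 0.5 s: 4.2 s after the start, 8 full steps
// have elapsed, so an 8-step loop is back on step 0.
console.log(stepDuration(120));          // 0.5
console.log(stepAt(104.2, 100, 120, 8)); // 0
```

It also illustrates why the BPM is frozen while the sequencer runs: a client that has not yet received a new BPM value would compute a different step index than the others.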

Let's thus create a file named global.js in the src/server/schemas directory and translate this information into a schema definition:

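```js
// src/server/schemas/global.js
export default {
  // whether the step sequencer is playing
  running: {
    type: 'boolean',
    default: false,
  },
  // time at which the sequencer starts, in synchronized time
  startTime: {
    type: 'float',
    default: null,
    nullable: true,
  },
  BPM: {
    type: 'integer',
    default: 120,
  },
  // one array of steps (0 or 1) per track
  score: {
    type: 'any',
    default: [],
  },
};
```

Let's then register this schema in our server: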

```js
// src/server/index.js
import { loadConfig } from '../utils/load-config.js';
import '../utils/catch-unhandled-errors.js';
import globalSchema from './schemas/global.js';

// ...

const server = new Server(config);
server.useDefaultApplicationTemplate();

server.stateManager.registerSchema('global', globalSchema);
```

Then, let's define some default values for our tracks. Our step sequencer will have 5 tracks of 8 steps each and, to ease development, a debug flag lets us initialize the score with some values already filled in:

```js
await server.start();

// change this flag to false to empty score by default
const debug = true;
let score;

if (debug) {
  // if debug, fill score with default values
  score = [
    [0, 1, 0, 1, 0, 1, 0, 1],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 0, 1, 0],
    [1, 0, 0, 0, 1, 0, 0, 1],
  ];
} else {
  // if not debug, empty score
  score = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
  ];
}

const global = await server.stateManager.create('global', {
  score,
});
```

Registering the plugins

Now that our state is set up, let's install and configure our different plugins.

First, let's register the plugins on the server side:

```js
// src/server/index.js
import '@soundworks/helpers/polyfills.js';
import { Server } from '@soundworks/core/server.js';
import pluginSync from '@soundworks/plugin-sync/server.js';
import pluginCheckin from '@soundworks/plugin-checkin/server.js';
import { loadConfig } from '../utils/load-config.js';
import '../utils/catch-unhandled-errors.js';
import globalSchema from './schemas/global.js';

// ...

const server = new Server(config);
server.useDefaultApplicationTemplate();

server.pluginManager.register('sync', pluginSync);
server.pluginManager.register('checkin', pluginCheckin);

server.stateManager.registerSchema('global', globalSchema);
```

Then, let's register the plugins in our two clients, "controller" and "thing". Since we use the checkin plugin to give each "thing" client a unique index, which tells it which track of the score to play, we only register the checkin plugin on the "thing" client.

Let's start with the "controller":

```js
// src/clients/controller/index.js
import launcher from '@soundworks/helpers/launcher.js';
import pluginSync from '@soundworks/plugin-sync/client.js';
import { html, render } from 'lit';

// ...

client.pluginManager.register('sync', pluginSync);

await client.start();
```

Then, let's register our two plugins in the "thing" client. As you may recall from the previous tutorial, in this case we want to synchronize the audio clock, which requires a bit more configuration:

```js
// src/clients/thing/index.js
import { Client } from '@soundworks/core/client.js';
import launcher from '@soundworks/helpers/launcher.js';
import pluginSync from '@soundworks/plugin-sync/client.js';
import pluginCheckin from '@soundworks/plugin-checkin/client.js';
import { AudioContext } from 'node-web-audio-api';
import { loadConfig } from '../../utils/load-config.js';

// ...

const client = new Client(config);
const audioContext = new AudioContext();

client.pluginManager.register('checkin', pluginCheckin);
client.pluginManager.register('sync', pluginSync, {
  getTimeFunction: () => audioContext.currentTime,
});
```

Implementing the controller

Now that everything is set up, it is time to implement our controller logic.

Let's first import a few components, attach to our global state and sketch our graphical user interface:

// src/clients/controller/index.js

import { html, render } from 'lit';

import '../components/sw-audit.js';

import '@ircam/sc-components/sc-matrix.js';

import '@ircam/sc-components/sc-text.js';

import '@ircam/sc-components/sc-transport.js';

import '@ircam/sc-components/sc-number.js';

// ...

await client.start();

const global = await client.stateManager.attach('global');

// update screen on update

global.onUpdate(() => renderApp());

function renderApp() {

render(html`

<div class="controller-layout">

<header>

<h1>${client.config.app.name} | ${client.role}</h1>

<sw-audit .client="${client}"></sw-audit>

</header>

<section>

<p>Hello ${client.config.app.name}!</p> // [!code --]

<div> // [!code ++]

<div style="margin-bottom: 4px;"> // [!code ++]

<sc-transport // [!code ++]

.buttons=${['play', 'stop']}

value=${global.get('running') ? 'play' : 'stop'}

></sc-transport> // [!code ++]

</div> // [!code ++]

<div style="margin-bottom: 4px;"> // [!code ++]

<sc-text>BPM</sc-text> // [!code ++]

<sc-number // [!code ++]

min="50" // [!code ++]

max="240" // [!code ++]

value=${global.get('BPM')}

></sc-number> // [!code ++]

</div> // [!code ++]

<div style="margin-bottom: 4px;"> // [!code ++]

<sc-matrix // [!code ++]

.value=${global.get('score')}

></sc-matrix> // [!code ++]

</div> // [!code ++]

</div>

</section>

</div>

`, $container);

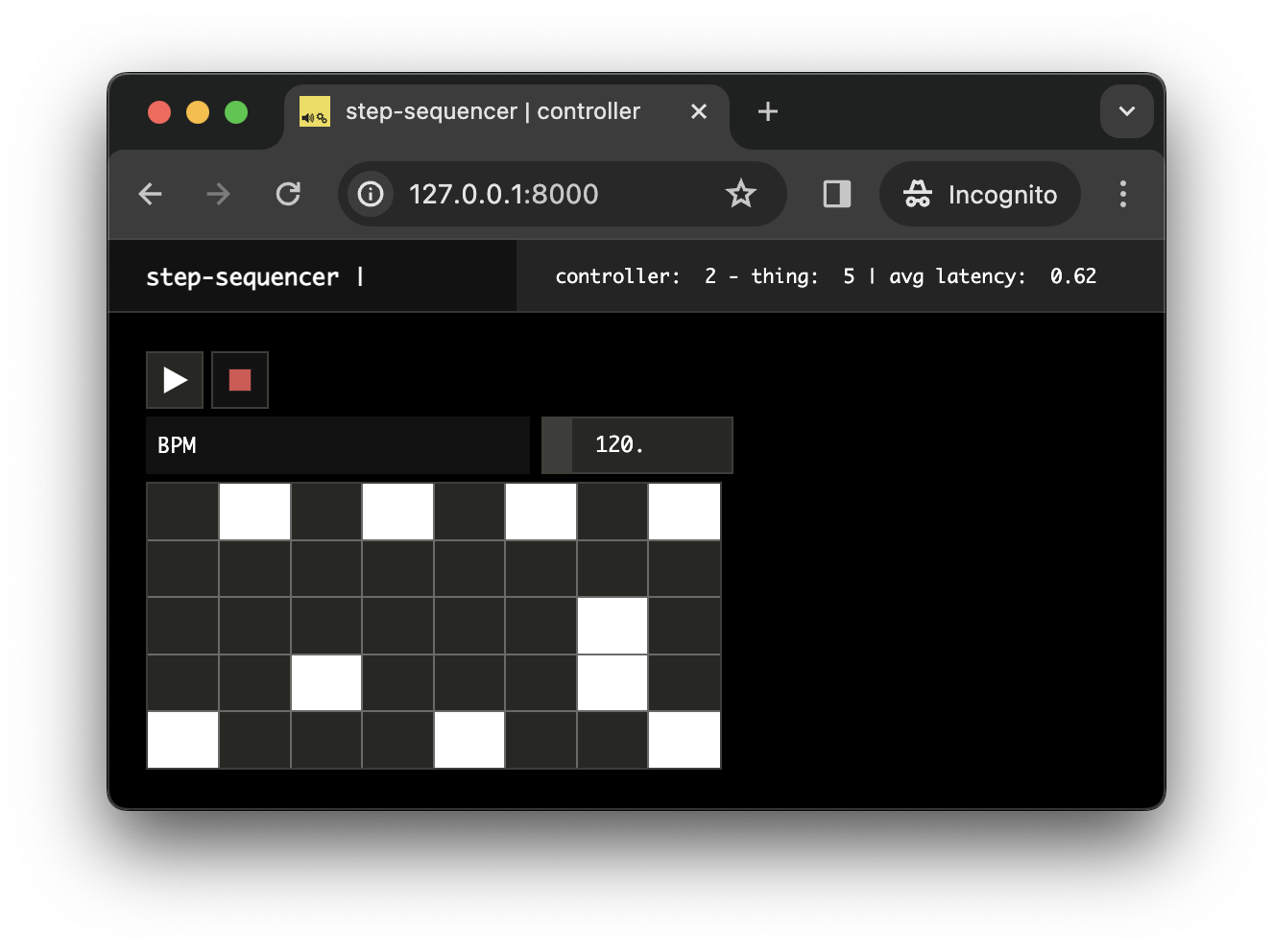

}If you open your controller in a Web browser, you should now see the following interface:

Now let's change our code a bit to propagate the changes made in the interface to the global state:

```js
await client.start();

const global = await client.stateManager.attach('global');
// update screen on update
global.onUpdate(() => renderApp());

const sync = await client.pluginManager.get('sync');

function renderApp() {
  render(html`
    <div class="controller-layout">
      <header>
        <h1>${client.config.app.name} | ${client.role}</h1>
        <sw-audit .client="${client}"></sw-audit>
      </header>
      <section>
        <div>
          <div style="margin-bottom: 4px;">
            <sc-transport
              .buttons=${['play', 'stop']}
              value=${global.get('running') ? 'play' : 'stop'}
              @change=${e => {
                if (e.detail.value === 'stop') {
                  global.set({ running: false });
                } else {
                  // grab current sync time
                  const syncTime = sync.getSyncTime();
                  // add an offset to the syncTime to handle network latency
                  const startTime = syncTime + 0.5;
                  // propagate values on the network
                  global.set({ running: true, startTime });
                }
              }}
            ></sc-transport>
          </div>
          <div style="margin-bottom: 4px;">
            <sc-text>BPM</sc-text>
            <sc-number
              min="50"
              max="240"
              value=${global.get('BPM')}
              ?disabled=${global.get('running')}
              @change=${e => global.set({ BPM: e.detail.value })}
            ></sc-number>
          </div>
          <div style="margin-bottom: 4px;">
            <sc-matrix
              .value=${global.get('score')}
              @change=${e => global.set({ score: e.detail.value })}
            ></sc-matrix>
          </div>
        </div>
      </section>
    </div>
  `, $container);
}

// render the interface once at startup
renderApp();
```

INFO

Note how we disable the BPM number box while the step sequencer is running:

```js
?disabled=${global.get('running')}
```

This makes clear to the user that the BPM can only be changed while the step sequencer is stopped.

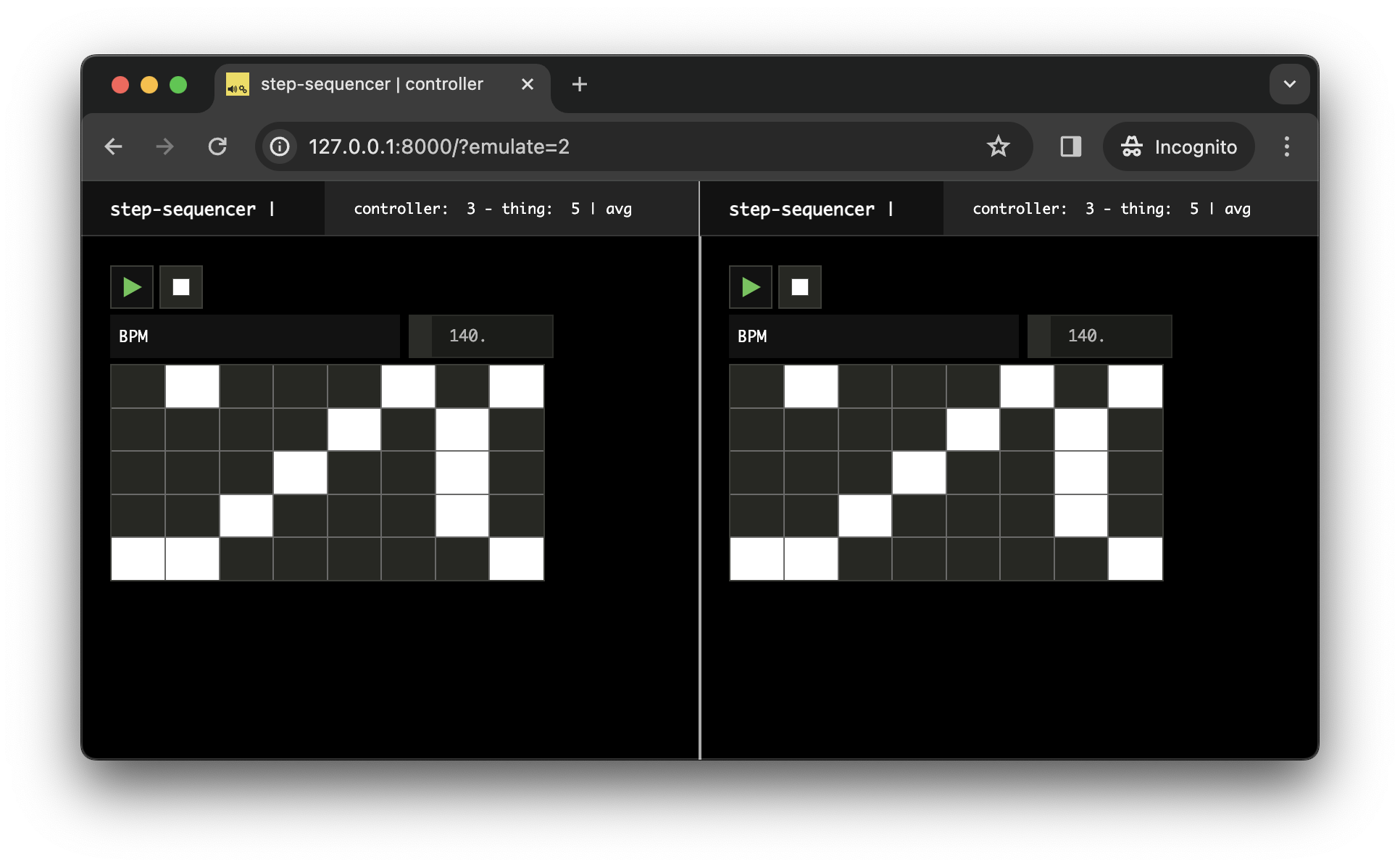

If you now open two controllers side by side, e.g. http://127.0.0.1:8000?emulate=2, you should see that the two controllers stay perfectly synchronized.
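The 0.5 second offset added to startTime in the transport callback gives every client some time to receive the update before the sequencer actually starts. If a client receives it too late anyway, or joins while the sequencer is already running, one possible strategy (our own suggestion, not part of this tutorial's code) is to wait for the next step boundary rather than play late. A sketch, where nextStepTime is a hypothetical helper and one step is again assumed to last 60 / BPM seconds:

```javascript
// Hypothetical helper: first step boundary at or after `now`.
// All values are in synchronized time (seconds).
// Assumption: one step lasts 60 / BPM seconds.
function nextStepTime(now, startTime, BPM) {
  // the update arrived in time: start as planned
  if (now <= startTime) return startTime;

  const duration = 60 / BPM;
  // number of steps already (partially) missed, rounded up to the next boundary
  const missedSteps = Math.ceil((now - startTime) / duration);
  return startTime + missedSteps * duration;
}

console.log(nextStepTime(9.8, 10, 120));  // 10: the offset was large enough
console.log(nextStepTime(10.2, 10, 120)); // 10.5: skip to the next step
```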

Implementing the audio engine

Now that all our controls are set up, let's go back to our thing clients to implement the audio engine and logic.

Loading some audio files

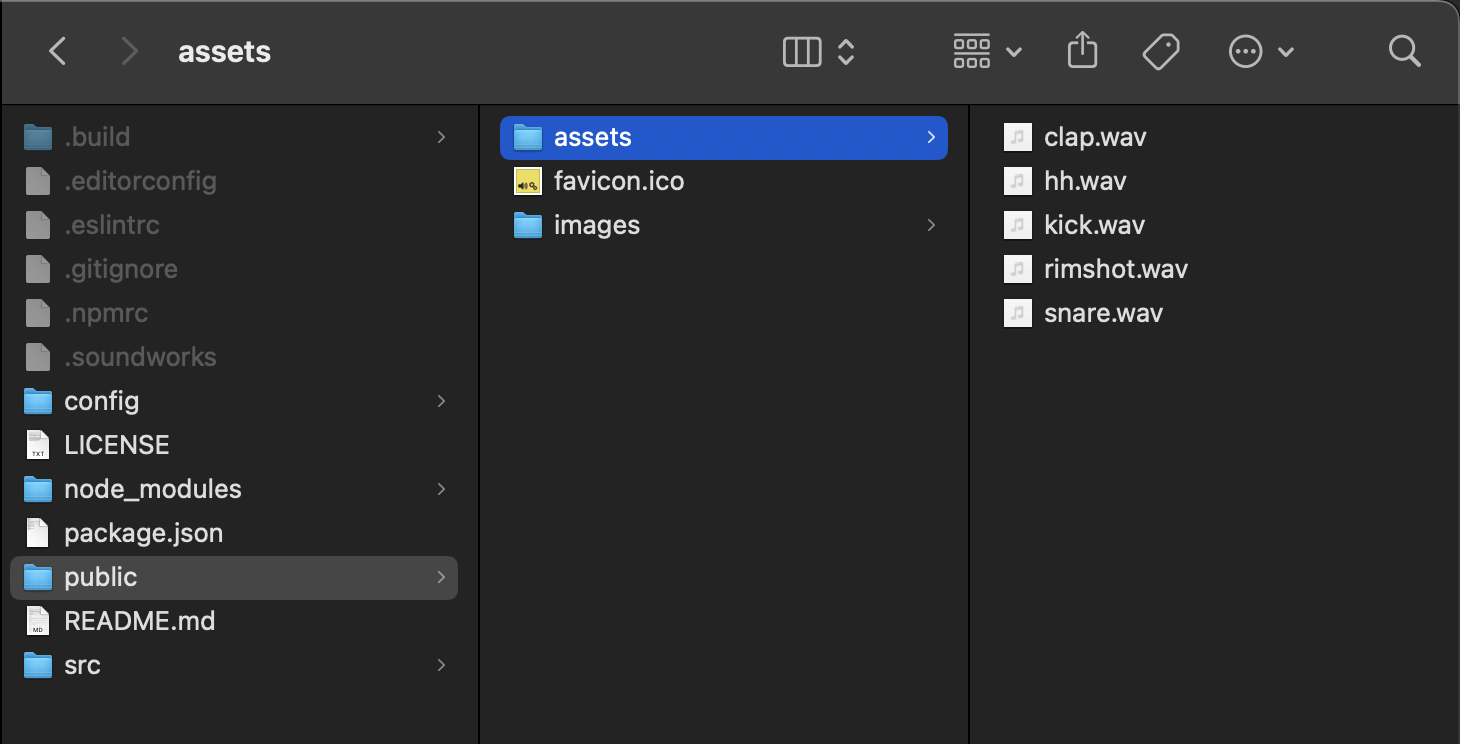

First let's add a few audio files into our project, that our step sequencer will be able to use for audio rendering. For simplicity, let's put them in the public directory.

INFO

The set of samples used in the tutorial can be downloaded here

Your file system should now look like the following:

Now let's load these files into our thing clients:

```js
// src/clients/thing/index.js
import { AudioContext } from 'node-web-audio-api';
import { AudioBufferLoader } from '@ircam/sc-loader';
import { loadConfig } from '../../utils/load-config.js';

// ...

await client.start();

// one audio file per track, in the same order as the tracks of the score
const audioFiles = [
  'public/assets/hh.wav',
  'public/assets/clap.wav',
  'public/assets/rimshot.wav',
  'public/assets/snare.wav',
  'public/assets/kick.wav',
];

const loader = new AudioBufferLoader(audioContext);
const audioBuffers = await loader.load(audioFiles);
console.log(audioBuffers);
```

You should see the loaded audio buffers logged in the console.
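The scheduler created next has to bridge two clocks: it reasons in synchronized time (sync.getSyncTime()), while sounds must be started in audioContext time, which the sync plugin treats as its local time (sync.getLocalTime(syncTime) converts back). The class below is a deliberately simplified, hypothetical model of that conversion, assuming the two clocks are related linearly (an offset plus a drift ratio). Its method names mirror the sync plugin's, but it only illustrates the idea and is not how the plugin estimates these parameters:

```javascript
// Simplified, hypothetical model of a local <-> sync clock mapping.
// Assumption: syncTime = offset + ratio * localTime (linear relation).
class LinearClockMap {
  constructor(offset, ratio = 1) {
    this.offset = offset; // seconds
    this.ratio = ratio;   // drift between the two clocks (1 = no drift)
  }

  // local time (e.g. audioContext.currentTime) -> synchronized time
  getSyncTime(localTime) {
    return this.offset + this.ratio * localTime;
  }

  // synchronized time -> local time, the inverse of getSyncTime
  getLocalTime(syncTime) {
    return (syncTime - this.offset) / this.ratio;
  }
}

// A client whose audio clock started when the synchronized clock read 1000 s:
const clock = new LinearClockMap(1000);
const startTime = 1012.5;                   // shared start time, in sync time
console.log(clock.getLocalTime(startTime)); // 12.5: when to start, in audio time
```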

Creating the synchronized scheduler

Then, let's create a scheduler that runs in synchronized time, i.e. that schedules events in the synchronized timeline, while giving us the corresponding time in the audioContext time reference:

```js
import { AudioBufferLoader } from '@ircam/sc-loader';
import { Scheduler } from '@ircam/sc-scheduling';
import { loadConfig } from '../../utils/load-config.js';

// ...

await client.start();

// retrieve initialized sync plugin
const sync = await client.pluginManager.get('sync');

const scheduler = new Scheduler(() => sync.getSyncTime(), {
  // provide the transfer function that allows the scheduler to map from
  // its current time (the sync time) to the audio time (the sync plugin local time)
  currentTimeToProcessorTimeFunction: syncTime => sync.getLocalTime(syncTime),
});

const audioFiles = [
  // ...
];
```

Implementing the audio engine

Then, let's implement a simple audio engine that plays one track of the score, with its associated audio buffer, according to a given index.
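A minimal sketch of such an engine, under a few assumptions. It is written as a function of the form (currentTime, processorTime) => nextTime, which is, to our understanding, the kind of processor the sc-scheduling Scheduler calls: currentTime in synchronized time, processorTime in audio time thanks to the currentTimeToProcessorTimeFunction option, and the returned value telling when to be called next. createTrackEngine and its options are hypothetical names, and one step per beat is assumed:

```javascript
// Hypothetical factory for a track engine. Assumptions:
// - the scheduler calls engine(currentTime, processorTime) and calls it again
//   at the returned time (currentTime in sync time, processorTime in audio time)
// - each step lasts one beat, i.e. 60 / BPM seconds
function createTrackEngine({ getScore, getBPM, trackIndex, trigger }) {
  let step = 0; // position in the track, starts at 0 for each new engine

  return function engine(currentTime, processorTime) {
    const score = getScore();
    // wrap the index so that extra clients still get a track
    const track = score[trackIndex % score.length];

    if (track[step] === 1) {
      trigger(processorTime); // start the sound in the audio timeline
    }

    step = (step + 1) % track.length;
    return currentTime + 60 / getBPM(); // be called again on the next step
  };
}

// Example: fake score and BPM, recording trigger times instead of playing sounds
const triggered = [];
const engine = createTrackEngine({
  getScore: () => [[1, 0, 1, 0]],
  getBPM: () => 120,
  trackIndex: 0,
  trigger: audioTime => triggered.push(audioTime),
});

// simulate the scheduler, with audio time = sync time + 10 s
let t = 0;
for (let i = 0; i < 4; i++) {
  t = engine(t, t + 10);
}
console.log(triggered); // [ 10, 11 ]
```

In the thing client, trackIndex would be the index provided by the checkin plugin, and trigger could create an AudioBufferSourceNode from the matching entry of audioBuffers, connect it to audioContext.destination and call its start(audioTime) method. Reading the score and BPM through getters on each step means score edits are heard on the next step without restarting the engine.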

Binding all components with the shared state

Finally, let's attach to the global state and create our step sequencer engine, which will react to updates of the global state.
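A minimal sketch of this binding. It assumes a scheduler exposing add(engine, time) and remove(engine), which we believe matches the sc-scheduling Scheduler (check its documentation), and a callback registered with global.onUpdate that receives the updated values as its first argument. bindTransport and createEngine are hypothetical names:

```javascript
// Hypothetical helper returning a callback for global.onUpdate().
// Starts a fresh engine at `startTime` when `running` becomes true,
// and removes it when `running` becomes false.
function bindTransport(scheduler, createEngine) {
  let engine = null;

  return function onGlobalUpdate(updates) {
    // ignore updates that do not concern the transport (BPM, score, ...)
    if (!('running' in updates)) return;

    // in every case, stop the engine currently playing, if any
    if (engine !== null) {
      scheduler.remove(engine);
      engine = null;
    }

    if (updates.running) {
      engine = createEngine(); // new engine, so playback restarts on step 0
      scheduler.add(engine, updates.startTime);
    }
  };
}
```

Here createEngine would build the track engine described in the previous step. Since onUpdate only reports changes, a client that joins while the sequencer is already running would also need to call the returned callback once with the current values of the global state.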